All you wanted to know about Apache Spark and Scala

Category: Apache Spark and Scala, General | Posted: Jun 08, 2016 | By: Alvera Anto

Big Data is an accepted challenge across industries, and it keeps engineers busy devising a variety of application solutions. While the industry was still caught up in Hadoop, Apache Spark was put forward as a better Big Data processing framework. Its main benefits are faster processing, support for deeper analysis and ease of implementation.

While demand for Big Data consultants continues to rise, Spark skills promise a lucrative career. As some food for thought in the Hadoop vs Spark debate, here is a quick overview.

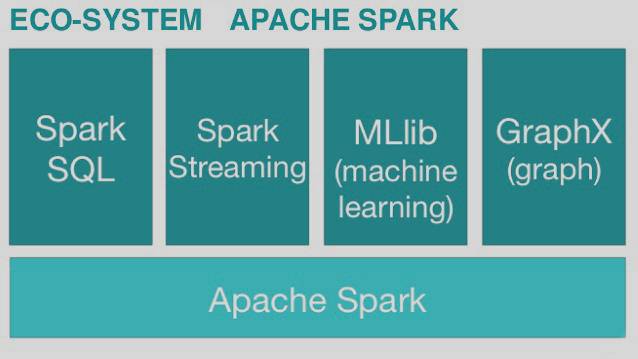

The Spark ecosystem

The Spark ecosystem consists of a core processing engine plus a set of libraries built on top of it. At a high level, the main components are –

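Spark Streaming – This component processes live data streams. Incoming data is divided into small batches, and each batch is handled as an RDD (Resilient Distributed Dataset), so a stream becomes a series of RDDs that can be processed with the same operations used for batch data. This lets Spark handle large volumes of real-time data efficiently.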
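To make the micro-batch idea concrete, here is a minimal sketch in plain Scala. It is an illustration under stated assumptions, not the Spark Streaming API: ordinary collections stand in for RDDs, batches are cut by record count rather than by time interval, and the names MicroBatchSketch, toBatches, wordCount and process are hypothetical.

```scala
// Toy model of Spark Streaming's micro-batch idea using plain Scala collections.
// Each inner Seq stands in for one RDD (one batch); the outer Seq stands in for
// the stream of batches. This is NOT the Spark Streaming API.
object MicroBatchSketch {

  // Cut the incoming records into fixed-size batches. Spark Streaming batches
  // by time interval; a fixed record count is a simplifying assumption here.
  def toBatches(records: Seq[String], batchSize: Int): Seq[Seq[String]] =
    records.grouped(batchSize).toList

  // Per-batch transformation: a word count, applied identically to every batch.
  def wordCount(batch: Seq[String]): Map[String, Int] =
    batch
      .flatMap(_.split("\\s+"))
      .filter(_.nonEmpty)
      .groupBy(identity)
      .map { case (word, occurrences) => word -> occurrences.size }

  // Process the "stream" batch by batch, producing one result per batch.
  def process(records: Seq[String], batchSize: Int): Seq[Map[String, Int]] =
    toBatches(records, batchSize).map(wordCount)
}
```

For example, process(Seq("a b", "b c", "a a"), 2) produces two results, one word-count map for each batch of two records.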

Spark SQL – This component lets you run SQL queries on structured data in Spark to select exactly the information you need. It reads different formats (such as JSON and Parquet) and exposes them for ad-hoc querying.
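As a rough illustration of what such a query computes, the sketch below expresses SELECT city, COUNT(*) FROM people WHERE age >= 18 GROUP BY city with plain Scala collections. In Spark 2.x the query itself would be run with spark.sql(...) against a view registered with createOrReplaceTempView; here the Person case class, the SqlSketch object and the sample data are hypothetical, and a local Seq stands in for a distributed dataset.

```scala
// Hypothetical record type standing in for one row of a "people" table.
case class Person(name: String, age: Int, city: String)

object SqlSketch {
  // Plain-Scala equivalent of:
  //   SELECT city, COUNT(*) FROM people WHERE age >= 18 GROUP BY city
  def adultsPerCity(people: Seq[Person]): Map[String, Int] =
    people
      .filter(_.age >= 18)                              // WHERE age >= 18
      .groupBy(_.city)                                  // GROUP BY city
      .map { case (city, rows) => city -> rows.size }   // COUNT(*)
}
```

The point of Spark SQL is that you write the declarative query and Spark plans and distributes the equivalent filter, group and count steps for you.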

Spark MLlib – This is Spark's machine learning library, with algorithms for a range of tasks, including classification, regression, collaborative filtering and clustering.
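Clustering is one of the tasks listed above, and MLlib ships a k-means implementation for it. The sketch below shows the idea behind k-means on one-dimensional points in plain Scala. It is a hedged illustration of the algorithm, not MLlib's API; the KMeansSketch name, the fixed iteration count and the caller-supplied starting centroids are assumptions.

```scala
object KMeansSketch {

  // Index of the centroid closest to point p.
  private def nearest(p: Double, centroids: Vector[Double]): Int =
    centroids.indices.minBy(i => math.abs(p - centroids(i)))

  // One-dimensional k-means: alternately assign each point to its nearest
  // centroid, then move each centroid to the mean of its assigned points.
  def kMeans(points: Seq[Double], initial: Vector[Double], iterations: Int): Vector[Double] =
    (1 to iterations).foldLeft(initial) { (centroids, _) =>
      val clusters = points.groupBy(p => nearest(p, centroids))
      centroids.indices.toVector.map { i =>
        clusters.get(i) match {
          case Some(members) => members.sum / members.size
          case None          => centroids(i) // keep an empty cluster's centroid in place
        }
      }
    }
}
```

With points 1, 2, 3, 10, 11, 12 and starting centroids 0 and 5, the centroids settle at 2.0 and 11.0 after a few iterations. MLlib runs the same assign-and-update loop, but over data partitioned across a cluster.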

Spark GraphX – This is the API for parallel graph computation. Important operators include joinVertices, aggregateMessages and subgraph. To simplify graph analytics, it also provides a collection of common graph algorithms, such as PageRank and connected components.
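The aggregateMessages operator sends messages along edges and combines the messages that arrive at each vertex. The plain-Scala sketch below mimics that pattern to compute every vertex's in-degree. The Edge case class, the GraphSketch object and its aggregateMessages helper are hypothetical stand-ins with simplified signatures. In GraphX itself, the send function receives an EdgeContext and calls sendToSrc or sendToDst.

```scala
// Hypothetical edge type: a directed edge from srcId to dstId.
case class Edge(srcId: Long, dstId: Long)

object GraphSketch {

  // Sketch of the aggregate-messages pattern: every edge emits zero or more
  // (targetVertex, message) pairs, and messages for the same vertex are
  // combined with mergeMsg. Vertices that receive no message are absent.
  def aggregateMessages[A](edges: Seq[Edge])(
      sendMsg: Edge => Seq[(Long, A)],
      mergeMsg: (A, A) => A): Map[Long, A] =
    edges
      .flatMap(sendMsg)
      .groupBy(_._1)
      .map { case (vertex, msgs) => vertex -> msgs.map(_._2).reduce(mergeMsg) }

  // In-degree: each edge sends the message 1 to its destination vertex,
  // and the messages arriving at a vertex are summed.
  def inDegrees(edges: Seq[Edge]): Map[Long, Int] =
    aggregateMessages[Int](edges)(e => Seq(e.dstId -> 1), _ + _)
}
```

Separating "what to send" from "how to merge" is what lets GraphX run the merge step in parallel on each partition before combining results.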

The Role of Scala

The Spark framework supports several programming languages, and Scala is often hailed as the best fit, not least because Spark itself is written in Scala. Scala is purely object-oriented: every value is an object and every operation is a method call. Classes and traits support flexible component architectures. Scala runs on the JVM, and its notable features include –

- Mixins – traits that can carry concrete implementations and be mixed into classes

- Operator overloading, since operators are ordinary methods

- Highly flexible syntax

- Functional programming features such as first-class functions, immutable collections and pattern matching
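The features listed above can be seen in a few lines of plain Scala. This is a minimal sketch; the Employee, Greeter, Engineer, Money and FunctionalDemo names are made up for illustration.

```scala
// A plain base class.
class Employee(val name: String)

// Mixin: a trait that carries a concrete implementation.
trait Greeter {
  def name: String
  def greet: String = s"Hello, $name"
}

// Engineer inherits from Employee and mixes in Greeter's behaviour with `with`.
class Engineer(fullName: String) extends Employee(fullName) with Greeter

// Operator overloading: `+` is simply a method defined on the case class.
case class Money(amount: BigDecimal) {
  def +(other: Money): Money = Money(amount + other.amount)
}

object FunctionalDemo {
  // Functional style: filter, map and fold over an immutable list,
  // passing functions as values and using no mutable state.
  def sumOfSquaresOfEvens(xs: List[Int]): Int =
    xs.filter(_ % 2 == 0).map(x => x * x).foldLeft(0)(_ + _)
}
```

Here new Engineer("Asha").greet returns "Hello, Asha", and Money(1.50) + Money(2.25) is an ordinary method call written with operator syntax.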

Why Spark?

Spark is an open source yet powerful processing engine, and its in-memory processing makes it fast enough for interactive analytics. Already regarded as the next big thing after Hadoop, Spark offers these benefits –

- Supports multiple programming languages, including Java, Scala and Python

- Highly fault tolerant – each RDD records the lineage of transformations that built it, so lost data can be recomputed automatically with no additional configuration

- Integrates well with existing Hadoop data, such as files in HDFS, and can run on Hadoop's YARN cluster manager

- Supports near-real-time processing of streaming data
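The fault-tolerance point rests on lineage: a dataset remembers how it was derived from reliable input, so a lost piece can be rebuilt by replaying those steps instead of being restored from a copy. The toy model below illustrates the idea in plain Scala. LineageDataset and its methods are hypothetical names, a local mutable map stands in for executor memory, and real Spark tracks lineage as a graph of RDD dependencies and recomputes lost partitions on other nodes of the cluster.

```scala
import scala.collection.mutable

// Toy model of lineage-based recovery (hypothetical class, not Spark's RDD).
// The source partitions play the role of reliable input data, and `lineage`
// is the chain of transformations recorded so far.
class LineageDataset(
    source: Vector[Seq[Int]],
    lineage: Seq[Int] => Seq[Int] = (xs: Seq[Int]) => xs) {

  // Computed partitions, simulating data held in executor memory.
  private val cache = mutable.Map.empty[Int, Seq[Int]]
  private var recomputed = 0

  // How many times a partition had to be (re)built from its lineage.
  def recomputationCount: Int = recomputed

  // Transformations compute nothing yet; they only extend the lineage.
  def map(f: Int => Int): LineageDataset =
    new LineageDataset(source, lineage.andThen(_.map(f)))

  def filter(p: Int => Boolean): LineageDataset =
    new LineageDataset(source, lineage.andThen(_.filter(p)))

  // Return partition i, rebuilding it from source + lineage if it is not cached.
  def partition(i: Int): Seq[Int] =
    cache.getOrElseUpdate(i, { recomputed += 1; lineage(source(i)) })

  // Simulate losing a partition, e.g. because an executor failed.
  def losePartition(i: Int): Unit = cache.remove(i)

  def collect(): Seq[Int] = source.indices.flatMap(partition)
}
```

After losePartition(1), the next collect() rebuilds only partition 1 from its lineage and returns the same result as before, while partition 0 is served from the cache.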

Should I learn Spark or Hadoop?

Big Data consultants have examined the Hadoop vs Spark debate closely. While both continue to grow and impress, Spark is relatively new and offers more opportunities than Hadoop. It is typically faster than Hadoop MapReduce, especially for iterative and interactive jobs, because it can keep intermediate results in memory instead of writing them to disk between processing steps.

Thus, pursuing a course in Apache Spark can land you a lucrative career offer while competition is still low.

All set to learn Apache Spark? Look for a trusted institute that can help you build a strong foundation in the basics and then master the subject.

99999999 (Toll Free)

+91 9999999