Difference between RDBMS and HBase

Category: Hadoop Posted: Jun 21, 2017 By: Serena Josh

Hadoop and RDBMS are different approaches to storing, processing and retrieving data. Hadoop is an open-source Apache project, while RDBMS stands for Relational Database Management System. The Hadoop framework is written in Java and is designed for scalable, high-performance applications. It stores large amounts of data across the file systems of multiple computers, and it can scale from a single node to several thousand nodes, with each node using its own local storage, CPU processing power and memory.
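To make the idea of spreading data across many machines concrete, here is a simplified Python sketch of how a file might be cut into fixed-size blocks, with each block copied to several nodes. The 128 MB block size and replication factor of 3 are the usual HDFS defaults (configurable through `dfs.blocksize` and `dfs.replication`); the node names and the round-robin placement are assumptions for illustration only, since real HDFS uses a rack-aware placement policy.

```python
import math

BLOCK_SIZE = 128 * 1024 * 1024  # 128 MB, the usual HDFS default block size
REPLICATION = 3                 # usual HDFS default replication factor

def plan_blocks(file_size, nodes, block_size=BLOCK_SIZE, replication=REPLICATION):
    """Split a file into fixed-size blocks and assign each block to `replication` distinct nodes.

    Round-robin placement is a simplification; real HDFS uses a rack-aware policy.
    """
    if replication > len(nodes):
        raise ValueError("need at least as many nodes as replicas")
    n_blocks = max(1, math.ceil(file_size / block_size))
    plan = []
    for b in range(n_blocks):
        start = b * block_size
        length = min(block_size, file_size - start)  # the last block may be shorter
        replicas = [nodes[(b + r) % len(nodes)] for r in range(replication)]
        plan.append((b, length, replicas))
    return plan

nodes = ["node1", "node2", "node3", "node4"]  # hypothetical cluster nodes
plan = plan_blocks(300 * 1024 * 1024, nodes)  # a 300 MB file
for block_id, length, replicas in plan:
    print(block_id, length // (1024 * 1024), "MB", replicas)
# 0 128 MB ['node1', 'node2', 'node3']
# 1 128 MB ['node2', 'node3', 'node4']
# 2 44 MB ['node3', 'node4', 'node1']
```

Because every block lives on three different nodes, losing any single machine still leaves two copies of each block, which is where the built-in redundancy comes from.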

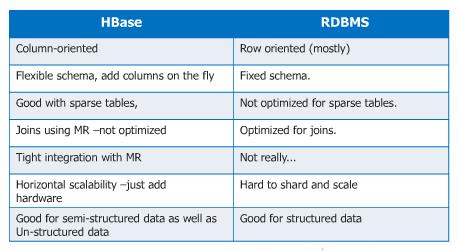

Relational Database Management Systems store data in tables made up of rows and columns, and SQL is used to retrieve the data held in those tables. An RDBMS also records relationships between tables, so that a column in one table can act as a reference to another table. A primary key uniquely identifies each row of a table, and a foreign key is a column whose values refer to the primary key of another table; related data can then be retrieved together by joining the tables in SQL queries. The tables and their relationships can themselves be changed with SQL statements.
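A small example helps show how primary keys, foreign keys and joins fit together. This is a minimal sketch using Python's built-in `sqlite3` module as a stand-in for a full RDBMS; the `customers` and `orders` tables and their data are hypothetical.

```python
import sqlite3

# In-memory SQLite database standing in for an RDBMS; table and column names are hypothetical.
conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite only enforces foreign keys when this is on

conn.execute("""
    CREATE TABLE customers (
        customer_id INTEGER PRIMARY KEY,  -- primary key: uniquely identifies each customer
        name        TEXT NOT NULL
    )
""")
conn.execute("""
    CREATE TABLE orders (
        order_id    INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL REFERENCES customers(customer_id),  -- foreign key
        amount      REAL NOT NULL
    )
""")
conn.executemany("INSERT INTO customers VALUES (?, ?)", [(1, "Asha"), (2, "Ben")])
conn.executemany("INSERT INTO orders VALUES (?, ?, ?)",
                 [(10, 1, 250.0), (11, 1, 75.5), (12, 2, 120.0)])

# Join the tables on the key columns to relate each order to its customer.
rows = conn.execute("""
    SELECT c.name, SUM(o.amount)
    FROM customers AS c
    JOIN orders AS o ON o.customer_id = c.customer_id
    GROUP BY c.name
    ORDER BY c.name
""").fetchall()
print(rows)  # [('Asha', 325.5), ('Ben', 120.0)]

# The foreign key stops an order from pointing at a customer that does not exist.
fk_rejected = False
try:
    conn.execute("INSERT INTO orders VALUES (13, 99, 10.0)")
except sqlite3.IntegrityError:
    fk_rejected = True
print("orphan order rejected:", fk_rejected)
```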

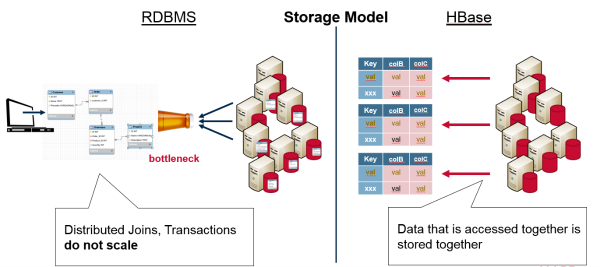

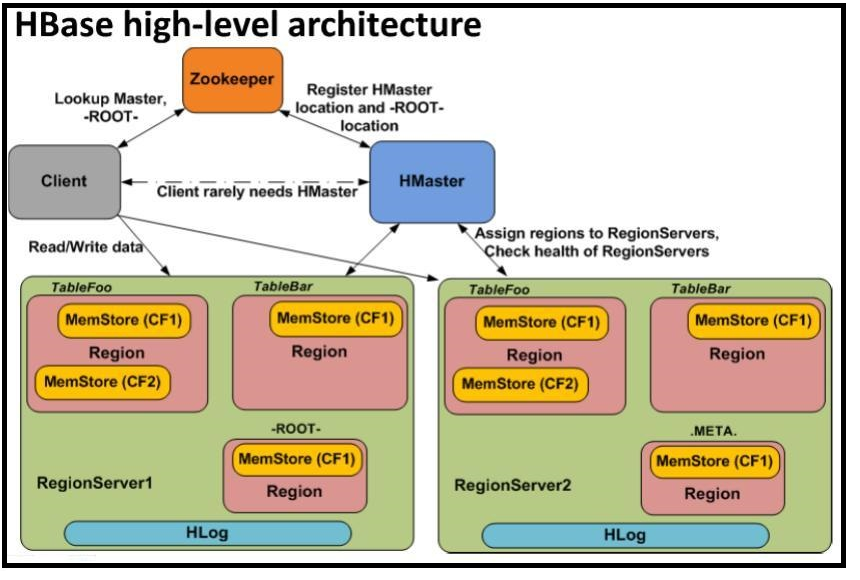

It is important to remember that Hadoop is not an actual database, although HBase and Impala, which work on top of it, can be considered databases. Hadoop is built around a distributed file system, the Hadoop Distributed File System (HDFS), with built-in redundancy and parallelism. Traditional databases (RDBMSs) have “ACID” properties, an acronym that stands for Atomicity, Consistency, Isolation and Durability. Hadoop does not provide these transactional guarantees. For instance, if one has to write code that withdraws money from one bank account and deposits it into another, one has to painstakingly handle every failure scenario oneself, such as what happens when the money has been withdrawn but a failure occurs before it is deposited into the other account.
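In an RDBMS, atomicity takes care of the bank-transfer case for you: either both updates happen or neither does. Below is a minimal sketch using Python's built-in `sqlite3` module as a stand-in for an RDBMS; the `accounts` table, the amounts and the simulated failure are all hypothetical. Without such transactions, the rollback logic would have to be written by hand.

```python
import sqlite3

# Hypothetical accounts table in an in-memory SQLite database.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE accounts (id TEXT PRIMARY KEY, balance REAL NOT NULL)")
conn.executemany("INSERT INTO accounts VALUES (?, ?)", [("A", 500.0), ("B", 100.0)])
conn.commit()

def transfer(conn, src, dst, amount, fail_midway=False):
    """Withdraw from src and deposit into dst as one atomic transaction."""
    try:
        with conn:  # commits if the block succeeds, rolls back if it raises
            conn.execute("UPDATE accounts SET balance = balance - ? WHERE id = ?", (amount, src))
            if fail_midway:
                raise RuntimeError("simulated failure after the withdrawal")
            conn.execute("UPDATE accounts SET balance = balance + ? WHERE id = ?", (amount, dst))
        return True
    except RuntimeError:
        return False

def balances(conn):
    return dict(conn.execute("SELECT id, balance FROM accounts ORDER BY id"))

ok = transfer(conn, "A", "B", 200.0)
after_ok = balances(conn)        # {'A': 300.0, 'B': 300.0}
failed = transfer(conn, "A", "B", 50.0, fail_midway=True)
after_fail = balances(conn)      # unchanged: the withdrawal was rolled back
print(ok, after_ok, failed, after_fail)
```

The second transfer fails after the withdrawal has already run, yet account A keeps its money because the whole transaction is rolled back.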

However, Hadoop brings a lot to the table too. It offers massive scalability in both processing power and storage at a much lower cost than a traditional RDBMS. Hadoop also has strong parallel processing capabilities, letting one run jobs in parallel to crunch large volumes of data.

When it comes to deciding how well traditional databases work with unstructured data, the answer is not so simple. According to market experts, several applications built on traditional RDBMSs that use a lot of unstructured data, such as video files or PDFs, work well. An RDBMS is usually designed to hold large chunks of data in its cache for faster processing while maintaining read consistency across sessions. Hadoop makes better use of memory for data processing, but it does not provide other guarantees such as read consistency. Hive queries have long been known to run several times slower than equivalent SQL in traditional databases. Users who expect SQL in Hive to be faster than in a database will be in for a big disappointment, since it does not scale well for complex analytics. While Hadoop excels at parallel processing problems such as finding a group of keywords in large sets of documents, RDBMS implementations will be much faster on comparable data sets.
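Keyword search over many documents is the kind of job Hadoop's MapReduce model parallelizes well. The Python sketch below imitates the map, shuffle and reduce steps with plain functions; it is not the Hadoop API, and the documents and keywords are made up for illustration.

```python
import re
from collections import defaultdict

KEYWORDS = {"hadoop", "hbase", "sql"}  # hypothetical search terms

def map_phase(doc_id, text):
    """Emit (keyword, doc_id) for every keyword occurrence in one document."""
    for word in re.findall(r"[a-z]+", text.lower()):
        if word in KEYWORDS:
            yield word, doc_id

def shuffle(pairs):
    """Group mapper output by key, as the framework does between map and reduce."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups

def reduce_phase(keyword, doc_ids):
    """Collapse repeated occurrences into a sorted list of distinct documents."""
    return keyword, sorted(set(doc_ids))

documents = {
    "doc1": "HBase runs on top of Hadoop.",
    "doc2": "SQL queries run in an RDBMS; Hive offers SQL on Hadoop.",
    "doc3": "Nothing relevant here.",
}

# Each map_phase call depends only on its own document, which is what lets Hadoop
# run map tasks in parallel on many nodes; here they simply run one after another.
mapped = (pair for doc_id, text in documents.items() for pair in map_phase(doc_id, text))
index = dict(reduce_phase(k, v) for k, v in shuffle(mapped).items())
print(index)  # {'hbase': ['doc1'], 'hadoop': ['doc1', 'doc2'], 'sql': ['doc2']}
```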

It will come as no surprise to any tech enthusiast that data is being generated at an explosive rate, with every second adding to the volumes that need processing. This means that traditional databases, which were designed around a single CPU and RAM cache, will not be able to support the business needs of an enterprise in the future. If one weighs old-style reports with high consistency against instant reports that are only reasonably or partially consistent, it is better to go for the more current one. The next step in the evolution of both Hadoop and RDBMS will be to address their respective shortcomings in order to deliver a satisfactory user experience.

Why and when would you choose one over the other?

Hadoop is an excellent replacement for an organization’s tape backup strategy, acting as a fast, tapeless backup.

Hadoop is great when one has to sift through endless gigabytes and terabytes of data without yet knowing what to do with it. Hadoop can be used even by people who have not yet worked out how to handle their data. Even so, there is no getting around the fact that a user will have to model his/her data, and skipping this step will cause fundamental problems at scale.

Hadoop is custom-made for large quantities of structured and unstructured data, such as email, poorly designed log files, Twitter feeds and web pages. Hadoop is often used for such data when users have not yet put in the time to understand it. An RDBMS is the better choice when a user wants to manage data and its relationships, and cares about data governance and security, typically on large budgets and the platforms those budgets pay for.

A traditional RDBMS is used to handle relational data, while Hadoop works well with both structured and unstructured data and supports multiple serialization and data formats such as Text, JSON, XML, Avro and more. Hadoop is designed from the ground up as a distributed system, so capacity can be increased by adding more nodes.
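One common way to handle many input formats is to parse each raw record into a common shape at read time. The Python sketch below is a hypothetical illustration of that idea for three of the formats named above (tab-separated text, JSON and XML) using only the standard library. It is not Hadoop's own input-format or SerDe machinery, the field names are made up, and Avro is left out because it needs a third-party library.

```python
import json
import xml.etree.ElementTree as ET

# Each parser turns one raw record into the same dict shape (hypothetical fields).
def from_text(line):
    """Tab-separated text: user_id<TAB>action<TAB>timestamp."""
    user_id, action, ts = line.rstrip("\n").split("\t")
    return {"user_id": user_id, "action": action, "ts": int(ts)}

def from_json(line):
    rec = json.loads(line)
    return {"user_id": rec["user_id"], "action": rec["action"], "ts": int(rec["ts"])}

def from_xml(fragment):
    elem = ET.fromstring(fragment)
    return {"user_id": elem.get("user"),
            "action": elem.findtext("action"),
            "ts": int(elem.findtext("ts"))}

PARSERS = {"text": from_text, "json": from_json, "xml": from_xml}

raw_inputs = [
    ("text", "u42\tlogin\t1497999600"),
    ("json", '{"user_id": "u42", "action": "click", "ts": 1497999660}'),
    ("xml", '<event user="u42"><action>logout</action><ts>1497999720</ts></event>'),
]

# Structure is applied only when the data is read, so all three formats can sit side by side.
records = [PARSERS[fmt](payload) for fmt, payload in raw_inputs]
for record in records:
    print(record)
```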

In some cases an SQL database is the only real choice. If the data size and type allow it, and the data is relational, one can go ahead with the RDBMS route, since it is a time-tested and mature technology.

When the data size or type is such that it cannot reasonably be stored in an RDBMS, Hadoop is the way out. A product catalogue is a good example: an automobile has far more attributes than a TV, which makes it hard to design a separate table for each product type. Another suitable example is machine-generated data, whose sheer size puts heavy stress on a traditional RDBMS; this is a classic case of Hadoop coming to the rescue. Document indexing is another obvious example.
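The product-catalogue problem is where a wide-column store such as HBase fits: each row stores only the columns it actually has, grouped into column families. The Python sketch below mimics that sparse layout with plain dictionaries; the row keys, the `info` and `spec` family names and the attribute names are all hypothetical, and this is not the HBase client API.

```python
from collections import defaultdict

# Hypothetical sparse, HBase-style layout: row key -> {"family:qualifier": value}.
# Values are kept as strings, loosely mirroring how HBase stores raw bytes.
catalogue = defaultdict(dict)

def put(row_key, column, value):
    """Store one cell; rows only ever hold the columns written to them."""
    catalogue[row_key][column] = value

def get(row_key, family=None):
    """Return a row's cells, optionally restricted to one column family."""
    row = catalogue.get(row_key, {})  # .get does not create missing rows
    if family is None:
        return dict(row)
    prefix = family + ":"
    return {col: val for col, val in row.items() if col.startswith(prefix)}

# A car and a TV share one table without a separate schema per product type.
put("car#1001", "info:name", "Hatchback LX")
put("car#1001", "info:price", "15000")
put("car#1001", "spec:engine_cc", "1197")
put("car#1001", "spec:fuel", "petrol")
put("car#1001", "spec:doors", "5")
put("tv#2001", "info:name", "42-inch LED TV")
put("tv#2001", "info:price", "400")
put("tv#2001", "spec:screen_in", "42")

print(get("tv#2001", "spec"))        # {'spec:screen_in': '42'}
print(len(get("car#1001", "spec")))  # 3
```

In a fixed relational table, the TV row would need empty columns for every car attribute (and vice versa); here each row simply omits what it does not have.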
