Top Five Best Practices for SAP Master Data Governance

Category: SAP MDG | Posted: Mar 24, 2017 | By: Ashley Morrison

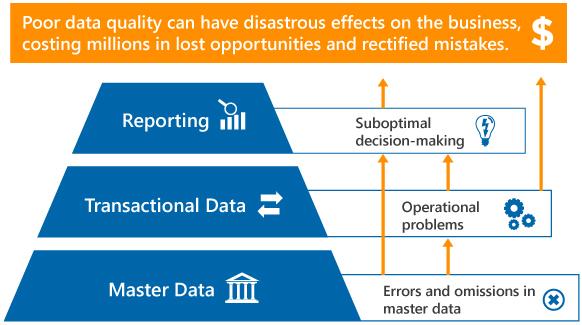

Master Data Governance (MDG) initiates policies and processes for the generation and maintenance of master data. Implementing such policies will bring about trust in the quality and usability of master data.

If organizations hope to institute policies regulating the creation and maintenance of master data, they should follow certain best practices meant to address the challenges in central management of shared master data. Here are the top five such best practices:

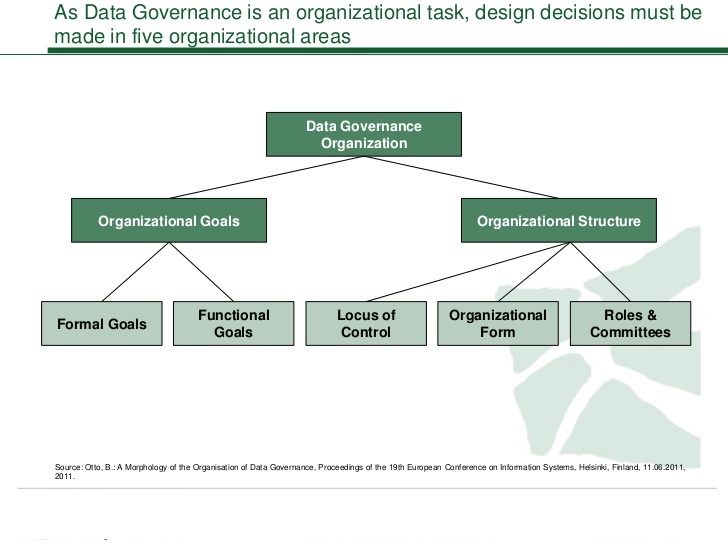

1. Establish a Master Data Governance Team and Operating Model

Organizations need to recognize that the majority of active business processes cross functional boundaries. Several people are involved in the collaborative workflows that generate master data as well as in executing the business processes themselves.

Because organizations are conventionally built around functions, cross-functional master data processes usually raise the question of who owns each process.

The most crucial best practice that should be implemented is the creation of a central data governance team to ensure that the processes are not impeded by data errors or process failures. This should be initiated and mandated by the executive management and the data governance team should include representatives from every enterprise section. Such a team should also be empowered with the authority to:

- Elucidate and approve policies governing the lifecycle for master data to ensure data quality and usability.

- Alert and inform personnel when data errors or process faults are jeopardizing the data quality and usability.

- Supervise process workflows that touch master data.

- Define and manage business rules for master data.

- Assess and monitor compliance with defined master data policies.

2. Recognize and Map the Generation and Consumption of Master Data

Even when data is stored and managed in one centralized location, organizations can still benefit from reusing it through data sharing managed from within a master data repository.

The quality and usability characteristics of the master data must be multi-purpose, meaning organizations need to capture the cross-functional needs of the entire set of master data consumers. It also means that those needs must be observable throughout all the data lifecycles associated with the cross-functional processes.

A horizontal view of the workflow processes within an organization is necessary to identify data producers and data consumers. This is a central requirement to properly measure data needs and expectations for quality, usability and availability.

This leads to the next best practice: documenting business process workflows and determining the production and consumption touchpoints for master data. Personnel should be able to tie the assurance of accuracy, usability and consistency to specific master data touchpoints, whether they are looking at workflow processes that create shared master data or reviewing processes that consume it.

Documenting process flow maps makes it possible to consider every master data touchpoint (creation, use and update) alongside each workflow activity. Points of data creation and updating provide opportunities to insert inspection and monitoring that verify data policies and data quality rules are being observed.

Reviewing usage scenarios provides traceability for the hierarchy of shared master values, which enables data dependencies to be identified. Such data dependencies must be closely monitored to ensure consistency in definition, aggregation, and reporting.

As a result, this information production map recognizes the opportunities for establishing governance directives for ensuring compliance with organization data policies.
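As a rough illustration of such an information production map, the sketch below groups documented touchpoints by master data entity into producers and consumers. It is a minimal sketch, not an SAP MDG feature: the `Touchpoint` class, the `build_production_map` function, and the sample process names are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Touchpoint:
    """One documented point where a workflow activity touches master data (hypothetical model)."""
    process: str  # workflow activity, e.g. "customer onboarding"
    entity: str   # master data entity touched, e.g. "customer"
    action: str   # "create", "update", or "read"


def build_production_map(touchpoints):
    """Group processes per entity into producers (create/update) and consumers (read)."""
    result = defaultdict(lambda: {"producers": set(), "consumers": set()})
    for tp in touchpoints:
        role = "consumers" if tp.action == "read" else "producers"
        result[tp.entity][role].add(tp.process)
    return dict(result)


# Sample touchpoints, invented for illustration only
touchpoints = [
    Touchpoint("customer onboarding", "customer", "create"),
    Touchpoint("credit review", "customer", "update"),
    Touchpoint("invoicing", "customer", "read"),
    Touchpoint("invoicing", "product", "read"),
]

production_map = build_production_map(touchpoints)

# Entities that are consumed but have no documented producer point to an ownership gap
unowned = sorted(
    entity for entity, roles in production_map.items() if not roles["producers"]
)
```

In this sample, `product` is read by invoicing but has no documented producer, which is the kind of gap a governance team would want to follow up on.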

3. Govern Shared Metadata: Concepts, Data Elements, Reference Data, and Rules

Master Data Governance is driven mainly by inconsistencies and discrepancies related to shared data use, specifically in terms of the features of master data concepts like product and consumer.

Inconsistencies in reference data sets, such as customer category codes and product codes, are very common. User-defined code sets overlap and duplicate one another because of specific issues inside an ERP environment. This is especially true when there is no centralized authority for defining and managing reference data standards.

Erroneous use of overlapping codes can cause incorrect behavior inside hard-coded applications. Such bugs are hard to pinpoint and highly damaging.

The third best practice for master data governance aims to minimize the negative impact of potential inconsistencies. Here, governance means centralizing the supervision and management of shared metadata, concentrating on entity concepts such as customer or supplier, on reference data and the corresponding code sets, and on data quality standards along with rules for validation at different touchpoints in the data lifecycle.

Most business functions have their own definitions of the terms used to refer to master data concepts. It is therefore advisable to use a collaborative process to compare definitions and settle differences, producing a standardized set of master-concept definitions.

Governance and management of shared metadata include defining and observing data policies for resolving variance across data elements with identical names. They also include procedures for normalizing reference data code sets, values, and related mappings. Normalization refers to the tabulation of data sets to eliminate ambiguity in the recall of data (at the slight expense of data duplication).

Normalizing shared master reference data mitigates the impact of failed processes and reduces the repeated reconciliations needed when reporting does not match the actual operational systems. Finally, the resulting consolidated data quality requirements and validation rules can be centralized and governed to ensure that the rules are applied consistently.
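One way to picture code set normalization is a single governed mapping table that translates each system's local codes into one canonical value. The following is a minimal sketch under stated assumptions: the system names (`erp_sales`, `crm`), the codes, and the `normalize_category` function are invented for illustration and do not reflect any SAP MDG API.

```python
# Hypothetical governed mapping: (source system, local code) -> canonical code
CANONICAL_CUSTOMER_CATEGORY = {
    ("erp_sales", "RET"): "RETAIL",
    ("erp_sales", "WHL"): "WHOLESALE",
    ("crm", "R"): "RETAIL",
    ("crm", "W"): "WHOLESALE",
}


def normalize_category(source_system: str, local_code: str) -> str:
    """Translate a system-specific customer category code into the canonical code.

    Unmapped codes raise an error instead of passing through silently, so that
    gaps in the mapping surface at the touchpoint rather than in downstream reports.
    """
    key = (source_system, local_code.strip().upper())
    try:
        return CANONICAL_CUSTOMER_CATEGORY[key]
    except KeyError:
        raise ValueError(
            f"Unmapped code {local_code!r} from system {source_system!r}"
        ) from None
```

Keeping the mapping in one place means that two systems using different codes for the same concept resolve to the same value, and a code nobody has reviewed cannot slip into reporting unnoticed.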

4. Institute Policies for Ensuring Quality at Stages of the Data Lifecycle

The underlying value in implementing master data management is the minimization of data variance and inconsistency. Correcting data downstream (post-processing) is a reactive rather than preventive measure and should be kept to a minimum. The number of such corrections will increase in the absence of data quality processes aimed at preventing errors at the early stages of the data lifecycle.

The fourth best practice is aimed at meeting the data requirements that have been collected. This needs a focused approach to defining data policies and implementing data governance processes. Quality assessment, data discovery and data exploration will also be needed. By evaluating potential anomalies within the master data set, these processes help reveal structural inconsistencies, semantic variations in data, and code set issues.

Pinpointing potential issues can lead to their resolution with stop-gap controls built into the common master data models utilized for information sharing. This will help minimize the introduction of errors into the environment in the first place.
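A preventive control of this kind can be sketched as a small set of centrally defined rules that every new record must pass before it is accepted. This is a minimal sketch, assuming a record is a plain dictionary; the field names, the rule list, and the `validate` function are hypothetical and are not taken from SAP MDG.

```python
import re
from typing import Callable, Dict, List, Tuple

# Each rule pairs a human-readable message with a check that returns True when the record passes
Rule = Tuple[str, Callable[[Dict], bool]]

# Hypothetical rules, defined once and shared by every process that creates customer records
RULES: List[Rule] = [
    ("name is required",
     lambda r: bool((r.get("name") or "").strip())),
    ("country must be a 2-letter uppercase code",
     lambda r: re.fullmatch(r"[A-Z]{2}", r.get("country") or "") is not None),
    ("category must be a governed value",
     lambda r: r.get("category") in {"RETAIL", "WHOLESALE"}),
]


def validate(record: Dict) -> List[str]:
    """Return the messages of all rules the record violates (an empty list means it passes)."""
    return [message for message, check in RULES if not check(record)]


# Reject at the point of creation instead of correcting downstream
violations = validate({"name": "Acme", "country": "de", "category": "RETAIL"})
```

Because the rules live in one list, a change approved by the governance team applies to every creation touchpoint at once.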

5. Implement Discrete Approval and Separation of Duties Workflow

The successful implementation of master data governance depends upon untangling the ownership of cross-functional processes within an organization. Clear definition of duties for an employee in a workflow is of paramount importance as a best practice to ensure successful process execution.

This will involve several aspects such as analyzing the relationship between business team members who define workflow processes, and IT teams who develop and execute the application of workflow processes. Another aspect is the classification of processes into different types such as with and without IT involvement. The next feature includes operational aspects of oversight such as review of newly entered items, approving new records, and sign off on completion of specific workflows.

Workflow ownership is a complex challenge because it is spread across different business areas, so a comprehensive set of policies is needed to oversee the workflow at all stages. This calls for a fifth best practice aimed at integrating discrete approval of tasks into the operational aspects of Master Data Governance.

This can be done through the delineation of oversight as part of an approval process focusing on business use of data not requiring IT intervention.
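The core of a separation-of-duties check can be sketched in a few lines: the person who requests a master data change must not be the person who approves it. This is an illustrative sketch only; `ChangeRequest`, `approve`, and the user names are hypothetical and do not represent how SAP MDG models its workflows.

```python
from dataclasses import dataclass
from typing import Optional, Set


class SeparationOfDutiesError(Exception):
    """Raised when the requester of a change tries to approve it."""


@dataclass
class ChangeRequest:
    """A requested master data change awaiting approval (hypothetical model)."""
    entity: str
    requested_by: str
    status: str = "submitted"
    approved_by: Optional[str] = None


def approve(request: ChangeRequest, approver: str, authorized: Set[str]) -> ChangeRequest:
    """Approve a change request, enforcing authorization and separation of duties."""
    if approver not in authorized:
        raise PermissionError(f"{approver} is not authorized to approve {request.entity} changes")
    if approver == request.requested_by:
        raise SeparationOfDutiesError("requester and approver must be different people")
    request.status = "approved"
    request.approved_by = approver
    return request


# Business data stewards approve; no IT intervention is involved in this path
stewards = {"alice", "bob"}
request = approve(ChangeRequest("customer", requested_by="alice"), "bob", stewards)
```

The check sits in the approval step itself, so it holds regardless of which business area submitted the request.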

Conclusion

The successful deployment of ERP solutions can revolutionize the way a company works through increased opportunities, decreased operational costs, and an improved level of trust in generated reports. Incorporating the best practices described above supports operational efficiency and organizational growth, and they should be kept in mind whenever enterprise-wide changes are instituted.

For questions or added info on this subject, you can visit our website. At ZaranTech we offer self-paced online training programs on SAP MDG topics. Enroll with us and skyrocket your career.

99999999 (Toll Free)  +91 9999999